This article also appeared on Tech Crunch

There was a time when Stanford University was considered a second-rate engineering school. It was the early 1940s, and the Department of Defense was pressed to assemble a top-secret team to understand and attack Germany’s radar system during World War II.

The head of the U.S. scientific research, Vannevar Bush, wanted the country’s finest radio engineer, Stanford’s Frederick Terman, to lead 800 researchers on this secret mission. But instead of basing the team at Terman’s own Stanford lab — a mere attic with a leaky roof — he was sent to the acclaimed Harvard lab to run the mission.

It’s hard to imagine Stanford passed over as an innovation hub today. Stanford has outpaced some of the biggest Ivy League universities in prestige and popularity. It has obliterated the traditional mindset that eliteness is exclusive to the Ivy League. Stanford has lapped top schools by centuries. It ranks in the top 3 in multiple global and national rankings (here, hereand here).

Plus, survey results point to Stanford as the No. 1 choice of most students and parents for the last few years, over Harvard, Princeton and Yale. In fact, even Harvard students haveacknowledged Stanford’s notable rise in popularity.

But something a little more intriguing is happening on Stanford’s campus…something that goes beyond these academic rankings. Since the beginning of time, the goal of academia has been not to create companies, but to advance knowledge for the sake of knowledge.

Yet Stanford’s engineering school has had a strong hand in building the tech boom that surrounds it today. It’s not only witnessed, but also notoriously housed, some of the most celebrated innovations in Silicon Valley.

While Stanford faculty and students have made notable achievements across disciplines, their role in shaping the epicenter of The Age of Innovation is perhaps one of the top — if not the most unique — distinguishers. As the world’s eyes fixate on the booming tech scene in Silicon Valley, Stanford’s affiliation shines brightly in the periphery.

In return, its entrepreneurial alumni offer among the most generous endowments to the university, breaking the record as the first university to add more than $1 billion in a single year. Stanford shares a relationship with Silicon Valley unlike any other university on the planet, chartering a self-perpetuating cycle of innovation.

But what’s at the root of this interdependency, and how long can it last in the rapidly shifting space of education technology?

Fred Terman, The Root Of Stanford’s Entrepreneurial Spirit

To truly understand Stanford’s role in building Silicon Valley, let’s revisit WWII and meet Terman. As the leader of the top-secret military mission, Terman was privy to the most cutting-edge, and exclusive, electronics research in his field. While the government was eager to invest more in electronics defense technology, he saw that Stanford was falling behind.

“War research which is [now] secret will be the basis of postwar industrial expansion in electronics…Stanford has a chance to achieve a position in the West somewhat analogous to that of Harvard of the East,” Terman predicted in a letter to a colleague.

After the war, he lured some of the best students and faculty to Stanford in the barren West by securing sponsored projects that helped strengthen Stanford’s reputation in electronics. Here’s a great visualization, thanks to Steve Blank, about how Stanford first fueled its entrepreneurship engine through war funds:

This focus on pushing colleagues and students to commercialize their ideas helped jumpstart engineering at Stanford. Eventually, Stanford’s reputation grew to becoming a military technology resource, right up there with Harvard and MIT.

But Terman’s advocacy of technology commercialization went beyond the military. As the Cold War began, Terman pushed to build the Stanford Industrial Park, a place reserved for private, cutting-edge tech companies to lease land. It was the first of its kind, and famously housed early tech pioneers like Lockheed, Fairchild, Xerox and General Electric.

The research park was the perfect recipe for:

- A new revenue stream for the university

- Bringing academic and industry minds together in one space

- Inspiring students to start their own companies

You might say that the Stanford Industrial Park was the original networking hub for some of the brightest minds of technology, merging academia and industry, with the goal of advancing tech knowledge.

They had a harmonic relationship in which industry folks took part-time courses at Stanford. In return, these tech companies offered great job opportunities for Stanford grads.

Since then, Stanford’s bridge from the university to the tech industry has been cast-iron strong, notorious for inspiring an entrepreneurial spirit in many students. The most famous story, of course, is that of Terman and his mentees William Hewlett and David Packard, who patented an innovative audio oscillator. Terman pushed the duo to take their breakthrough commercial.

Eventually, Hewlett-Packard (HP) was born and moved into the research park as the biggest PC manufacturer in the world. To date, he and the late David Packard, together with their family foundations and company, have given more than $300 million to Stanford.

Because of their proximity to top innovations, Stanford academics had the opportunity to spot technological shifts in the industry and capitalize by inventing new research breakthroughs. For instance:

- Computer Graphics Inc.: Students were enamored by the possibility of integrated circuit technology and VLSI capability. The Geometry Engine, the core innovation behind computer generated graphics, was developed on the Stanford campus.

- Atheros: Atheros introduced the first wireless network. Teresa Meng built, with government funding, a low-power GPS system with extended battery life for soldiers. This led to the successful low-power wireless network, which eventually became Wi-Fi.

These are just a few of some of the most groundbreaking technological innovations sprouted from Stanford soil: Google, Sun Microsystems, Yahoo!, Cisco, Intuit … and the list goes on — to more than 40,000 companies.

Stanford also has a reputation as a go-to pool for talent. For instance, the first 100 Googlers were also Stanford students. And today, 1 in 20 Googlers hail from Stanford.

Proximity to Silicon Valley Drives its Tech Entrepreneurial Spirit

If you stroll along the 700 acres of Stanford’s Research Park, not only will you see cutting-edge companies like Tesla and Skype, but also world-renowned tech law firms and R&D labs. It’s a sprawling network of innovation in the purest sense of the term — it’s the best place to uproot a nascent idea.

Proximity to Silicon Valley is not the most important thing that distinguishes Stanford, but it’s certainly the most unique. It’s the hotbed of computer science innovators, deep-pocketed venture capital firms and angel investors.

At least today, everyone who wants to “make it” in tech is going to Silicon Valley. And — just like Terman’s early Stanford days — it’s where you can meet the right people with the right resources who can help you turn the American entrepreneurial dream into a reality.

Just look at the increasing number of H-1B visa applicants each year, most of whom work in tech. There were more than 230,000 applicants in 2015, up from 170,000 in 2014. Four out of the top 11 cities that house the most H-1B visa holders are all in Silicon Valley.

Plus, an increasing number of non-tech companies are setting up R&D shops in Silicon Valley. Analyst Brian Solis recently led a survey of more than 200 non-tech companies; 61 percent of those had a presence in Silicon Valley, which helped them “gain access and exposure to the latest technology.”

There’s certainly a robust emphasis on technology entrepreneurship penetrating the campus of Stanford engineers.

Still, opponents often point to media exaggeration that reduce Stanford into a startup-generator. Of course, Stanford’s prestigious curriculum is a draw for top faculty and research across disciplines. But, given the evidence and anecdotes, there’s certainly a robust emphasis on technology entrepreneurship penetrating the campus of Stanford engineers. How can it not?

Michael Harris, a Stanford alumnus, can attest to a general sense of drive and passion. “It’s not quite as dominant as the media makes it seem,” he said, “but there’s some element of truth.”

Stanford students are by and large interested in creating real things that have a real effect in the world. The fact that Silicon Valley is right here and students have fairly good access through friends, professors, the school, etc. to people in the industry is definitely a big bonus. It gets people excited about doing work in the tech industry and feeling motivated and empowered to start something themselves.

This Entrepreneurial Spirit Is Evolving Into A Sense Of Urgency

Terman’s early emphasis on turning the ideas developed in academia into viable products is just as — if not more — rampant today. The most telling evidence is that Stanford’s campus is producing more tech startup founders than any other campus.

But what’s even more curious is that some students, particularly in the graduate department, don’t even finish their degrees. It’s monumental to pay thousands of dollars for a master’s degree in computer science, only to leave to launch a startup. Even at the undergrad level, Harris thought about leaving college after doing one amazing internship the summer after his junior year.

“I will say that working in industry teaches you more things faster about doing good work in industry than school does by a really big margin (order of magnitude maybe),” Harris said, “so I don’t actually think it’s crazy for people not to go back to school other than the fact that some companies seem to think it’s important for someone to have a piece of paper that says they graduated from college.”

Of course, most people do finish their degrees. But this sense of urgency to leave — whether or not the majority follow through — is palpable.

Last year, six Stanford students quitschool to work at the same startup.Another 20 left for similar reasons the year before that. Apparently, Stanford’s coveted StartX program wasn’t enough for them.

StartX is an exclusive 3-month incubator program to help meet the demand for students who want to take their business ideas to the market — complete with renowned mentorship and support from faculty and industry experts to help the brightest Stanfordites turn their ideas into a reality.

In a recent talk, Stanford President John Hennessy proudly spoke about this program as a launchpad for students to scratch their itch for entrepreneurship. But when an audience member asked him about students dropping out of school, he said, “Look, for every one Instagram success, there are another 100 failed photo-sharing sites.” And, he added, “So far, all of the StartX program students have graduated — at least all of the undergrads.”

Generally, Stanford’s graduation rates have dipped somewhat in recent years. Of students who enrolled in 2009, 90 percent had graduated within 5 years, Stanford said, compared with a 5-year graduation rate of 92.2 percent 5 years earlier. And this is not a bad thing for Stanford. Since the very beginning, a core function of Stanford’s excellence is its investment in its students to build great commercial products — starting with the early days of Terman.

The Future: What Will Stanford Be Without Silicon Valley?

But both education and the Valley are shifting. The very nature of innovation frees us from brick-and-mortar walls of elite institutions and companies.

If the best application of technology is to democratize opportunity, then every single person on the planet should have affordable access to Stanford’s world-class education online. The rise of Massive Open Online Courses (MOOC) and online resources are an indication of the future of education.

It’s a future in which ambitious students have the opportunity to educate themselves. At the forefront of technology, educational institutions, including Stanford, are starting to decentralize the model through online course material.

Stanford shares a relationship with Silicon Valley unlike any other university on the planet.

Meanwhile, Silicon Valley may have pioneered the tech boom, but it’s no longer the only tech hub. Bursts of technological hubs are forming all over the world. In a piece on the H-1B visa cap, I found that the top investors in early stage startups have set up shop in India, China and Israel, three of the largest global tech hubs after Silicon Valley.

Realistically, the H-1B visa cap and city infrastructure can’t practically support exponential growth in Silicon Valley. The nucleus of innovation will eventually shift, deeming the proximity to Silicon Valley irrelevant.

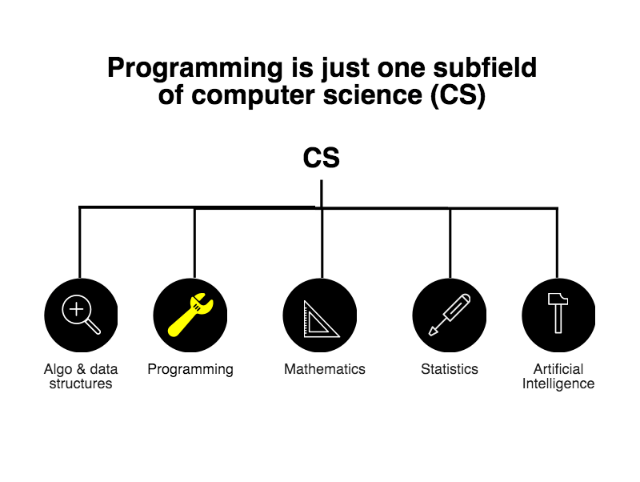

Plus — as some students aren’t even finishing their degrees — it’ll be worth re-evaluating if thousands of dollars for a master’s in CS at Stanford is really worth the brand name on a resume or access to coffee with top startup founders who happen to reside in Palo Alto.

But if Stanford’s proximity to Silicon Valley drives its entrepreneurial essence, which helps bolster both the reputation and funding of Stanford, what will happen when the ambitious, startup founders at Stanford start getting their education online?

Will Stanford end up disrupting the very unique factor that distinguishes Stanford from any other university on the planet? Or will Stanford’s alumni continue to fuel its self-perpetuating cycle of innovation and maintain its reputation as an innovation hub?